New CodeProject.AI License Plate Recognition (YOLO11) Module

- Thread starter MikeLud1

- Start date


kennethpro01

n3wb

- Mar 31, 2025

- 24

- 3

> I should have it ready by the end of the week.

Awesome!

> I ran a test with your image and the only misread I am getting is the I being read as a 1. Can you post the module info?

Also got the same result now after reinstalling and removing everything, including CodeProject.AI (it's necessary in my case since I got a permission error when modifying files). Thanks for the help.

View attachment 229788

View attachment 229787

IReallyLikePizza2

Known around here

View attachment 229784

Sample Image from Google

View attachment 229785

Result

View attachment 229783

I see 7 people downloaded the appsettings.json file. I am curious how the new module is working for everyone.

Skinny1

Getting the hang of it

> I see 7 people downloaded the appsettings.json file. I am curious how the new module is working for everyone.

I've had it going for about 24 hours and it is working great. I think it is more accurate. It is also picking up everything on a vehicle, such as phone numbers, advertising, etc. But that is good!

IReallyLikePizza2

Known around here

> I am curious how the new module is working for everyone.

It’s working much better than the old module. As previously mentioned, it requires much less CPU processing, and as an added bonus it seems to have resolved the memory leakage I was experiencing.

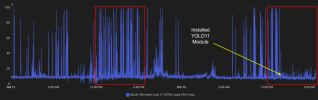

> It’s working much better than the old module. As previously mentioned, it requires much less CPU processing, and as an added bonus it seems to have resolved the memory leakage I was experiencing.

The AI model load is now on your iGPU.

Below are the inner workings of the ALPRYOLO11 module.

ALPRYOLO11 Module - High-Level Pipeline Description

Overview

The ALPRYOLO11 module is an Automatic License Plate Recognition (ALPR) system that detects and reads license plates from images using YOLO11-based ONNX models. The module integrates with CodeProject.AI Server and provides license plate detection, character recognition, state classification, and optional vehicle detection.

Architecture Layers

1. Entry Point Layer (alpr_adapter.py)

- ALPRAdapter class extends CodeProject.AI's ModuleRunner

- Handles HTTP requests to /v1/vision/alpr endpoint

- Manages thread-safe request processing with locks

- Detects and configures hardware acceleration (CUDA, MPS, DirectML)

- Initializes the core ALPR system

- Tracks statistics (plates detected, histogram of license plates)

- Manages module lifecycle (initialization, cleanup, status reporting)
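A rough sketch of the lock-plus-statistics pattern listed above. It does not use the CodeProject.AI SDK; `PlateStats` and its method names are hypothetical and only illustrate how a lock keeps the plate count and histogram consistent under concurrent requests.

```python
import threading
from collections import Counter


class PlateStats:
    """Hypothetical stand-in for the adapter's statistics tracking."""

    def __init__(self):
        self._lock = threading.Lock()
        self.plates_detected = 0
        self.histogram = Counter()  # plate text -> times seen

    def record(self, plates):
        # The lock keeps the counter and histogram consistent when several
        # requests finish at the same time.
        with self._lock:
            self.plates_detected += len(plates)
            self.histogram.update(plates)

    def snapshot(self):
        with self._lock:
            return {
                "platesDetected": self.plates_detected,
                "histogram": dict(self.histogram),
            }


# Simulate eight concurrent requests that each report two plates.
stats = PlateStats()
workers = [
    threading.Thread(target=stats.record, args=(["ABC1234", "XYZ789"],))
    for _ in range(8)
]
for w in workers:
    w.start()
for w in workers:
    w.join()
```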

2. Configuration Layer (alpr/config.py)

- ALPRConfig dataclass loads settings from environment variables

- Configures feature flags (state detection, vehicle detection, debug mode)

- Sets confidence thresholds for all models

- Manages model paths and validates file existence

- Defines character organization parameters

- Validates all configuration values

3. Core Processing Layer (alpr/core.py)

- ALPRSystem orchestrates the complete detection pipeline

- Coordinates all detector and classifier components

- Manages the multi-stage processing workflow

- Handles debug image generation at each stage

- Converts results to API-compatible format

Processing Pipeline

Stage 1: Image Input & Preprocessing

Code:

HTTP Request → ALPRAdapter.process() → Image extraction → RGB to BGR conversion

- Receives image from API request

- Converts PIL image to OpenCV numpy array (BGR format)

- Saves input debug image (optional)

Stage 2: License Plate Detection (YOLO/plate_detector.py)

Code:

Image → PlateDetector.detect() → Day/Night plate bounding boxes

- Detects license plates using plate_detector.onnx model

- Returns separate lists for day plates and night plates

- Outputs bounding box corners for each detected plate

- Applies confidence threshold filtering

- Saves plate detection debug images (optional)
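The filtering and day/night split could look roughly like this. The `Detection` type and the class names `day_plate` / `night_plate` are assumptions for illustration; the default of 0.45 is the PLATE_DETECTOR_CONFIDENCE default listed in this post.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str          # assumed class names: "day_plate" / "night_plate"
    confidence: float
    corners: list       # four (x, y) corner points


def split_plates(detections, min_confidence=0.45):
    """Drop low-confidence detections, then split by plate type."""
    kept = [d for d in detections if d.confidence >= min_confidence]
    day = [d for d in kept if d.label == "day_plate"]
    night = [d for d in kept if d.label == "night_plate"]
    return day, night


raw = [
    Detection("day_plate", 0.91, [(10, 10), (110, 12), (110, 40), (10, 38)]),
    Detection("night_plate", 0.30, [(200, 50), (300, 50), (300, 80), (200, 80)]),
    Detection("night_plate", 0.62, [(400, 90), (500, 90), (500, 120), (400, 120)]),
]
day_plates, night_plates = split_plates(raw)
```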

Stage 3: Plate Extraction & Transformation

Code:

Plate corners → four_point_transform() → Cropped & warped plate image

- Uses 4-point perspective transform to extract plate region

- Corrects for viewing angle and skew

- Applies configured aspect ratio (default 4.0)

- Dilates corners by configured pixels (default 5px)

- Saves cropped plate to alpr.jpg

- Saves cropped plate debug image (optional)
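A sketch of the geometry around the transform, assuming "dilation" means pushing each corner away from the plate's centroid and that the output height follows from the width and the aspect ratio. Both helpers are hypothetical, not the module's code. The warp itself is typically done with OpenCV's `cv2.getPerspectiveTransform` and `cv2.warpPerspective` and is left out so the example runs without OpenCV.

```python
import math


def dilate_corners(corners, pixels=5):
    """Push each corner `pixels` away from the quadrilateral's centroid."""
    cx = sum(x for x, _ in corners) / len(corners)
    cy = sum(y for _, y in corners) / len(corners)
    out = []
    for x, y in corners:
        dx, dy = x - cx, y - cy
        dist = math.hypot(dx, dy)
        if dist == 0:  # degenerate corner on the centroid; leave it alone
            out.append((x, y))
            continue
        scale = (dist + pixels) / dist
        out.append((cx + dx * scale, cy + dy * scale))
    return out


def output_size(corners, aspect_ratio=4.0):
    """Width from the longer horizontal edge; height from the aspect ratio."""
    tl, tr, br, bl = corners
    width = max(math.dist(tl, tr), math.dist(bl, br))
    return round(width), round(width / aspect_ratio)


plate = [(100, 150), (300, 150), (300, 200), (100, 200)]  # tl, tr, br, bl
grown = dilate_corners(plate, pixels=5)
size = output_size(grown)
```

Dilating before the warp leaves a small margin around the characters, which helps when the detector's corners sit slightly inside the plate edge.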

Stage 4: State Classification (YOLO/state_classifier.py)

Code:

Plate image → StateClassifier.classify() → US state label

- Only for day plates when enable_state_detection=True

- Uses state_classifier.onnx model

- Identifies US state from license plate design

- Returns state code and confidence score

- Saves state classification debug image (optional)

Stage 5: Character Detection (YOLO/character_detector.py)

Code:

Plate image → CharacterDetector.detect() → Character bounding boxes

- Uses char_detector.onnx model

- Detects individual character locations on plate

- Returns bounding boxes for each character

- Applies confidence threshold filtering

Stage 6: Character Classification (YOLO/char_classifier_manager.py)

Code:

Character crops → CharClassifierManager.classify_characters() → Character labels

- Uses char_classifier.onnx model

- Recognizes each character (A-Z, 0-9)

- Returns character label and confidence for each detection

- Supports multiple prediction alternatives

Stage 7: Character Organization (YOLO/char_organizer.py)

Code:

Character detections → CharacterOrganizer.organize() → Sorted character sequence

- Critical stage for correct license plate reading

- Implements deterministic, stable multi-key sorting

- Handles multiple plate layouts:

  - Single-line horizontal (e.g., "ABC1234")

  - Multi-line plates (e.g., "ABC" over "1234")

  - Vertical characters (e.g., "ABC123MD")

  - Mixed layouts

  - Overlapping characters

- Sorts purely by spatial coordinates (X, Y)

- Does NOT use YOLO detection order (which is arbitrary)

- Filters invalid characters by height threshold

- Generates reading order debug visualizations (optional):

  - Numbered sequence image

  - Arrow flow diagram
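To make the spatial sorting concrete, here is a simplified sketch that handles only single-line and multi-line plates. How the module actually applies LINE_SEPARATION_THRESHOLD and HEIGHT_FILTER_THRESHOLD is not described in this post, so treating both as fractions of the median character height is an assumption, and `organize` is a hypothetical function.

```python
from statistics import median


def organize(chars, line_sep=0.6, height_filter=0.6):
    """chars: list of (label, x_center, y_center, height) tuples."""
    if not chars:
        return ""
    med_h = median(c[3] for c in chars)
    # Drop boxes much shorter than a typical character (stickers, bolts).
    chars = [c for c in chars if c[3] >= height_filter * med_h]

    # Walk top to bottom and start a new line when the vertical gap is large.
    rows = []
    for c in sorted(chars, key=lambda c: (c[2], c[1])):
        if rows and c[2] - rows[-1][-1][2] <= line_sep * med_h:
            rows[-1].append(c)
        else:
            rows.append([c])

    # Within a line, read left to right; ties fall back to y, then label,
    # so the result never depends on detection order.
    return "".join(
        c[0]
        for row in rows
        for c in sorted(row, key=lambda c: (c[1], c[2], c[0]))
    )


# Detections arrive in arbitrary order, as they would from a YOLO model.
single_line = organize(
    [("3", 70, 20, 30), ("A", 10, 21, 30), ("1", 50, 19, 30), ("C", 30, 20, 30)]
)
two_line = organize(
    [("1", 10, 60, 30), ("B", 30, 20, 30), ("2", 30, 61, 30),
     ("A", 10, 20, 30), ("x", 50, 20, 10)]  # "x" is too short and is dropped
)
```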

Stage 8: License Plate Assembly

Code:

Sorted characters → Concatenate → Final license plate string

- Combines organized characters into final license number

- Generates top-N alternative readings

- Calculates overall confidence score

- Saves character detection debug image (optional)
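One way the top-N alternatives could be generated, shown as a sketch. Scoring a candidate by the mean of its per-character confidences is an assumption; the post does not say how the module computes the overall score, and `top_plates` is a hypothetical helper.

```python
from itertools import product


def top_plates(positions, n=3):
    """positions: per-character lists of (label, confidence) alternatives,
    already in reading order. Returns the n best (plate, score) pairs."""
    if not positions:
        return []
    candidates = []
    for combo in product(*positions):
        text = "".join(label for label, _ in combo)
        score = sum(conf for _, conf in combo) / len(combo)
        candidates.append((text, round(score, 3)))
    # Highest score first; ties broken alphabetically for stable output.
    candidates.sort(key=lambda c: (-c[1], c[0]))
    return candidates[:n]


positions = [
    [("A", 0.95)],
    [("B", 0.90), ("8", 0.40)],
    [("1", 0.80), ("I", 0.70)],  # the I-versus-1 confusion mentioned earlier
]
best = top_plates(positions, n=2)
```

Enumerating every combination is fine for a plate with a handful of characters and two or three alternatives each; a longer list of alternatives would call for pruning.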

Stage 9 (Optional): Vehicle Detection (YOLO/vehicle_detector.py)

Code:

Original image → VehicleDetector.detect_and_classify() → Vehicle make/model

- Only when enable_vehicle_detection=True AND day plates detected

- Uses vehicle_detector.onnx and vehicle_classifier.onnx

- Detects vehicles in the image

- Classifies vehicle make and model

- Saves vehicle detection debug image (optional)

Stage 10: Response Assembly

Code:

All results → Format API response → Return JSON

- Aggregates day plates, night plates, and vehicles

- Formats as CodeProject.AI compatible JSON

- Includes:

  - License plate numbers

  - Bounding boxes

  - Confidence scores

  - State information

  - Top alternative readings

  - Vehicle make/model (if enabled)

  - Processing time metrics

- Updates module statistics

- Saves final annotated debug image (optional)

Key Components

ONNX Session Manager (YOLO/session_manager.py)

- Singleton pattern for managing ONNX Runtime sessions

- Handles DirectML fallback for Windows GPU acceleration

- Provides session reuse across requests

- Manages cleanup and resource deallocation
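A minimal sketch of a singleton session cache, assuming one session per model path. `SessionManager.get` and the `loader` argument are hypothetical; the loader is injected so the example runs without ONNX Runtime installed.

```python
import threading


class SessionManager:
    """Hypothetical process-wide cache: one session object per model path."""

    _instance = None
    _instance_lock = threading.Lock()

    def __new__(cls):
        with cls._instance_lock:
            if cls._instance is None:
                cls._instance = super().__new__(cls)
                cls._instance._sessions = {}
                cls._instance._lock = threading.Lock()
        return cls._instance

    def get(self, model_path, loader):
        # `loader` builds the session; in a real module it might wrap
        # onnxruntime.InferenceSession(model_path, providers=[...]).
        with self._lock:
            if model_path not in self._sessions:
                self._sessions[model_path] = loader(model_path)
            return self._sessions[model_path]

    def cleanup(self):
        with self._lock:
            self._sessions.clear()


loads = []


def fake_loader(path):  # stand-in so the sketch runs without ONNX Runtime
    loads.append(path)
    return object()


a = SessionManager().get("plate_detector.onnx", fake_loader)
b = SessionManager().get("plate_detector.onnx", fake_loader)
```

Reusing the session matters because loading a model is far slower than running one inference on it.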

Base YOLO Model (YOLO/base.py)

- Abstract base class for all YOLO-based detectors

- Handles ONNX model loading and inference

- Provides common preprocessing and postprocessing

- Manages hardware acceleration (CPU, CUDA, MPS, DirectML)

Image Processing Utilities (utils/image_processing.py)

- Four-point perspective transformation

- Debug image generation with annotations

- Drawing utilities for bounding boxes and labels

Hardware Acceleration

Priority order:

- CUDA (NVIDIA GPUs) - Highest priority

- MPS (Apple Silicon) - macOS only

- DirectML (Windows GPUs) - Windows fallback

- CPU - Universal fallback
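The priority order can be sketched as a simple lookup. The provider strings are ONNX Runtime's names; mapping "MPS" to `CoreMLExecutionProvider` is an assumption, since this post does not say which provider the module uses on Apple hardware. `pick_providers` is a hypothetical helper.

```python
# Assumed mapping from the names used above to ONNX Runtime provider strings.
PRIORITY = [
    "CUDAExecutionProvider",    # NVIDIA GPUs
    "CoreMLExecutionProvider",  # Apple hardware (assumed stand-in for "MPS")
    "DmlExecutionProvider",     # DirectML on Windows
    "CPUExecutionProvider",     # always available
]


def pick_providers(available):
    """Return the best accelerator followed by CPU as a fallback."""
    for name in PRIORITY:
        if name in available:
            if name == "CPUExecutionProvider":
                return [name]
            return [name, "CPUExecutionProvider"]
    return ["CPUExecutionProvider"]


# With onnxruntime installed, `available` could come from
# onnxruntime.get_available_providers().
chosen = pick_providers(["DmlExecutionProvider", "CPUExecutionProvider"])
```

On a Windows machine with only an Intel iGPU, this picks DirectML first and keeps CPU as the fallback.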

Debug Mode Features

When SAVE_DEBUG_IMAGES=True, generates:

- input_*.jpg - Original input images

- plate_detector_*.jpg - Plate detections with boxes

- plate_crop_*.jpg - Extracted plate regions

- state_classifier_*.jpg - State classification results

- char_detector_*.jpg - Character detections

- char_organizer_reading_order.jpg - Numbered character sequence

- char_organizer_character_flow.jpg - Arrow-based reading path

- vehicle_detector_*.jpg - Vehicle detections

- final_*.jpg - Complete annotated results

Performance Characteristics

- Thread-safe: Uses locks for concurrent request handling

- Stateful: Maintains statistics across requests

- Efficient: Reuses ONNX sessions for multiple inferences

- Configurable: Extensive environment variable configuration

- Robust: Comprehensive error handling and cleanup

Data Flow Summary

Code:

HTTP Request

↓

[ALPRAdapter] → Thread-safe processing

↓

[ALPRSystem] → Orchestration

↓

[PlateDetector] → Detect plates (day/night)

↓

[four_point_transform] → Extract plate region

↓

[StateClassifier] → Identify state (day plates only)

↓

[CharacterDetector] → Detect characters

↓

[CharClassifierManager] → Recognize characters

↓

[CharacterOrganizer] → Sort spatially (NOT by detection order)

↓

[Assembly] → Build license plate string + alternatives

↓

[VehicleDetector] → Detect & classify vehicle (optional)

↓

[Response] → JSON with all results

↓

HTTP Response

Models Used

| Model | File | Purpose |
|---|---|---|
| Plate Detector | plate_detector.onnx | Detects license plates (day/night) |
| State Classifier | state_classifier.onnx | Identifies US state from plate design |
| Character Detector | char_detector.onnx | Detects individual character locations |
| Character Classifier | char_classifier.onnx | Recognizes characters (OCR) |
| Vehicle Detector | vehicle_detector.onnx | Detects vehicles (optional) |
| Vehicle Classifier | vehicle_classifier.onnx | Classifies vehicle make/model (optional) |

Configuration Parameters

Core Settings

- ENABLE_STATE_DETECTION - Enable/disable state identification (default: false)

- ENABLE_VEHICLE_DETECTION - Enable/disable vehicle detection (default: false)

- PLATE_ASPECT_RATIO - License plate aspect ratio (default: 4.0)

- CORNER_DILATION_PIXELS - Corner dilation for plate extraction (default: 5)

Confidence Thresholds

- PLATE_DETECTOR_CONFIDENCE - Plate detection threshold (default: 0.45)

- STATE_CLASSIFIER_CONFIDENCE - State classification threshold (default: 0.45)

- CHAR_DETECTOR_CONFIDENCE - Character detection threshold (default: 0.40)

- CHAR_CLASSIFIER_CONFIDENCE - Character recognition threshold (default: 0.40)

- VEHICLE_DETECTOR_CONFIDENCE - Vehicle detection threshold (default: 0.45)

- VEHICLE_CLASSIFIER_CONFIDENCE - Vehicle classification threshold (default: 0.45)

Character Organization (Advanced)

- LINE_SEPARATION_THRESHOLD - Multi-line detection threshold (default: 0.6)

- VERTICAL_ASPECT_RATIO - Vertical character threshold (default: 1.5)

- OVERLAP_THRESHOLD - IoU threshold for overlaps (default: 0.3)

- MIN_CHARS_FOR_CLUSTERING - Minimum chars for clustering (default: 6)

- HEIGHT_FILTER_THRESHOLD - Height ratio filter (default: 0.6)

- CLUSTERING_Y_SCALE_FACTOR - Y-coordinate scaling (default: 3.0)

Debug Options

- SAVE_DEBUG_IMAGES - Enable debug image saving (default: false)

- DEBUG_IMAGES_DIR - Debug images directory (default: "debug_images")
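A cut-down sketch of loading a few of these settings from environment variables with validation. The variable names and defaults are the ones listed above; `ALPRConfigSketch` and the helper functions are hypothetical and cover only a subset of the parameters.

```python
import os
from dataclasses import dataclass


def _env_bool(name, default):
    return os.environ.get(name, str(default)).strip().lower() in ("1", "true", "yes")


def _env_float(name, default):
    return float(os.environ.get(name, default))


@dataclass
class ALPRConfigSketch:
    enable_state_detection: bool
    plate_aspect_ratio: float
    plate_detector_confidence: float
    save_debug_images: bool
    debug_images_dir: str

    @classmethod
    def from_env(cls):
        cfg = cls(
            enable_state_detection=_env_bool("ENABLE_STATE_DETECTION", False),
            plate_aspect_ratio=_env_float("PLATE_ASPECT_RATIO", 4.0),
            plate_detector_confidence=_env_float("PLATE_DETECTOR_CONFIDENCE", 0.45),
            save_debug_images=_env_bool("SAVE_DEBUG_IMAGES", False),
            debug_images_dir=os.environ.get("DEBUG_IMAGES_DIR", "debug_images"),
        )
        cfg.validate()
        return cfg

    def validate(self):
        # Fail at startup rather than in the middle of a request.
        if not 0.0 <= self.plate_detector_confidence <= 1.0:
            raise ValueError("PLATE_DETECTOR_CONFIDENCE must be within 0.0-1.0")
        if self.plate_aspect_ratio <= 0:
            raise ValueError("PLATE_ASPECT_RATIO must be positive")


# Override two settings, leave the rest at their defaults.
os.environ["PLATE_DETECTOR_CONFIDENCE"] = "0.6"
os.environ["SAVE_DEBUG_IMAGES"] = "True"
config = ALPRConfigSketch.from_env()
```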

Error Handling

The pipeline includes comprehensive error handling at each stage:

- Model loading errors trigger initialization failures

- Individual plate processing errors are caught and logged without stopping the entire batch

- Invalid configurations are validated at startup

- Resource cleanup is guaranteed through destructors and cleanup methods

- Thread-safe operations prevent race conditions
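The "log and keep going" behavior for individual plates can be sketched like this. `process_plates` and `fake_reader` are hypothetical names; the real pipeline's per-plate function is not shown in this post.

```python
import logging

logger = logging.getLogger("alpr_sketch")


def process_plates(plates, read_plate):
    """Run `read_plate` on every plate; log failures and keep going."""
    results = []
    for index, plate in enumerate(plates):
        try:
            results.append(read_plate(plate))
        except Exception:
            # One bad crop should not discard the other plates in the image.
            logger.exception("Plate %d failed; skipping", index)
    return results


def fake_reader(plate):  # hypothetical reader that fails on empty crops
    if not plate:
        raise ValueError("empty plate crop")
    return plate.upper()


readings = process_plates(["abc1234", "", "xyz789"], fake_reader)
```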

API Endpoint

Code:

POST /v1/vision/alpr

Parameters:

- image - Image file (required)

- min_confidence - Minimum confidence threshold 0.0-1.0 (optional, default: 0.4)

Response:

Code:

{
  "success": true,
  "processMs": 150,
  "inferenceMs": 120,
  "count": 1,
  "message": "Found 1 license plates: ABC1234",
  "predictions": [
    {
      "confidence": 0.85,
      "is_day_plate": true,
      "label": "ABC1234",
      "plate": "ABC1234",
      "x_min": 100,
      "y_min": 150,
      "x_max": 300,
      "y_max": 200,
      "state": "CA",
      "state_confidence": 0.92,
      "top_plates": [
        {"plate": "ABC1234", "confidence": 0.85},
        {"plate": "ABC1Z34", "confidence": 0.78}
      ]
    }
  ]
}
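On the client side, a response in this shape could be read as follows. The field names come from the sample response above; `best_plates` is a hypothetical helper, and the HTTP call is left out so the example runs without a server.

```python
import json


def best_plates(response_text, min_confidence=0.4):
    """Return (plate, confidence) pairs at or above min_confidence."""
    data = json.loads(response_text)
    if not data.get("success"):
        return []
    return [
        (p["plate"], p["confidence"])
        for p in data.get("predictions", [])
        if p["confidence"] >= min_confidence
    ]


sample = """
{"success": true, "count": 1, "predictions": [
  {"confidence": 0.85, "plate": "ABC1234", "state": "CA",
   "top_plates": [{"plate": "ABC1234", "confidence": 0.85},
                  {"plate": "ABC1Z34", "confidence": 0.78}]}
]}
"""
plates = best_plates(sample)
```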


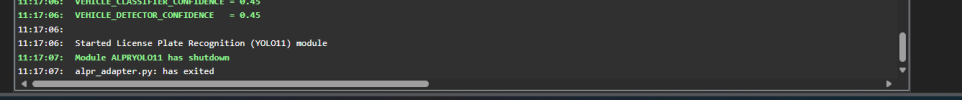

> I see 7 people downloaded the appsettings.json file. I am curious how the new module is working for everyone.

I downloaded the appsettings.json file and followed your instructions, but the new module will not stay running on my machine. I will get more details on the exact symptoms and errors this evening. I am away from the PC right now.

> Installed the new module; now it tries to start, then shuts down. I am brand new to BI/CPAI.

This is exactly the behavior I am experiencing with my installation.

I think the issue is the Remote Display Adapter. Do you have the Intel iGPU driver for the Intel UHD Graphics 630 GPU installed?

View attachment 229940

> I think the issue is the Remote Display Adapter. Do you have the Intel iGPU driver for the Intel UHD Graphics 630 GPU installed?

The remote display adapter is present because I am using RDP to access my BI PC, as I assume 95% of the people here who run BI do.

See below:

Server version: 2.9.5

System: Windows

Operating System: Windows (Windows 10 Redstone)

CPUs: Intel(R) Core(TM) i7-7700 CPU @ 3.60GHz (Intel)

1 CPU x 4 cores. 8 logical processors (x64)

GPU (Primary): Intel(R) HD Graphics 630 (1,024 MiB) (Intel Corporation)

Driver: 27.20.100.9664

System RAM: 32 GiB

Platform: Windows

BuildConfig: Release

Execution Env: Native

Runtime Env: Production

Runtimes installed:

.NET runtime: 9.0.0

.NET SDK: Not found

Default Python: Not found

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

Intel(R) HD Graphics 630:

Driver Version 27.20.100.9664

Video Processor Intel(R) HD Graphics Family

System GPU info:

GPU 3D Usage 0%

GPU RAM Usage 0

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168