I've been successfully running this YOLO11.NET module for a couple of days but with the various -v5 models (combined, dark and animal). It's fine, but statistically slower on average than the YOLO5.NET module on my i5-8500 machine: I was getting roughly 150ms times (long term average) on the older module, now seeing about 190ms on the newer module. Is this expected to improve once we see the fully native/optimized for YOLO11 models?

New CodeProject.AI Object Detection (YOLO11 .NET) Module

- Thread starter MikeLud1


> I've been successfully running this YOLO11.NET module for a couple of days but with the various -v5 models (combined, dark and animal). It's fine, but statistically slower on average than the YOLO5.NET module on my i5-8500 machine: I was getting roughly 150ms times (long term average) on the older module, now seeing about 190ms on the newer module. Is this expected to improve once we see the fully native/optimized for YOLO11 models?

Are you using the YOLO11.NET module in Docker or Windows? Docker will be slower.

killerdan56

Getting the hang of it

> Any update on the combined model?

The current version has the ipcam-combined-v5 model. I am hoping to finish training the YOLO11 models by the end of the month.

> Are you using the YOLO11.NET module in Docker or Windows? Docker will be slower.

Just a conventional (bare metal) Windows 11 installation, no VM or Docker involved. I thought it was slightly odd to see the slower responses too, but questioned whether it might be due to the "non-optimized" models running under a newer branch of the newer underlying module. Not a huge difference, but slightly disappointing nevertheless.

> Dumb question, but do you still need YOLOv5.NET for everything else (object detection, face detection, etc.), or can it be uninstalled once YOLO11.NET is in place?

You can uninstall all the modules you are not using.

> You can uninstall all the modules you are not using.

I don't know if it's related to that or not, but I removed YOLOv5.NET so that only YOLO11 was there, and now YOLO11 won't stay started (not sure if it did before). It shows GPU.

I'm getting:

16:49:03:ObjectDetectionYOLO11Net.exe: Fatal error. 0xC0000005

16:49:03:ObjectDetectionYOLO11Net.exe: at Microsoft.ML.OnnxRuntime.InferenceSession.Init(Byte[], Microsoft.ML.OnnxRuntime.SessionOptions, Microsoft.ML.OnnxRuntime.PrePackedWeightsContainer)

16:49:03:ObjectDetectionYOLO11Net.exe: at Microsoft.ML.OnnxRuntime.InferenceSession..ctor(Byte[], Microsoft.ML.OnnxRuntime.SessionOptions)

16:49:03:System.__Canon, System.Private.CoreLib, Version=9.0.0.0, Culture=neutral, PublicKeyToken=7cec85d7bea7798e: ..ctor(System.String, Microsoft.ML.OnnxRuntime.SessionOptions)

16:49:03:ObjectDetectionYOLO11Net.exe: at CodeProject.AI.Modules.ObjectDetection.YOLO11.ObjectDetectorV5..ctor(System.String, Microsoft.Extensions.Logging.ILogger, Int32)

16:49:03:ObjectDetectionYOLO11Net.exe: at CodeProject.AI.Modules.ObjectDetection.YOLO11.ObjectDetectionYOLO11ModuleRunner.GetDetectorV5(System.String, Boolean)

16:49:03:ObjectDetectionYOLO11Net.exe: at CodeProject.AI.Modules.ObjectDetection.YOLO11.ObjectDetectionYOLO11ModuleRunner.DoDetectionV5(System.String, CodeProject.AI.SDK.Common.RequestFormFile, Single)

16:49:03:ObjectDetectionYOLO11Net.exe: at CodeProject.AI.Modules.ObjectDetection.YOLO11.ObjectDetectionYOLO11ModuleRunner.Process(CodeProject.AI.SDK.Backend.BackendRequ

It looks like just prior to the crash it tries to detect things (I haven't gotten face or person detection to work since changing to v11):

16:52:51:Request 'custom' dequeued from 'objectdetection_queue' (...c91a2a)

16:52:51:Response rec'd from Object Detection (YOLO11 .NET) command 'custom' (...21f1a5) ['No objects found'] took 12838ms

16:56:55:Response rec'd from Object Detection (YOLO11 .NET) command 'custom' (...3f93fd) ['Found person'] took 12846ms

Responses are taking a very long time (over 12 seconds each in the log above) using the built-in GPU on the i5-13500T.

Also, with just YOLO11 installed, in BI do I want both ipcam-combined and ipcam-combined-v5, etc. (the v5 and the non-v5 models)? Looking back at some posts, I guess having them both listed is OK? The v5 ones might be faster?

EDIT: I removed and reinstalled the YOLO11 object detection module, and now it's showing CPU instead of GPU. That is how I had YOLOv5.NET set up; is CPU what I ideally want here with just the i5-13500T?

EDIT2: Spoke too soon. It started up as CPU, then switched to GPU and crashed again; it won't stay running. I suppose to get YOLOv5.NET back on, I have to revert the appsettings file?

I don't have CUDA installed. Do I need to?

These came up after I tried to trick it into CPU mode (which it held for a little while) by choosing the wrong device ID:

Unable to load the model at C:\Program Files\CodeProject\AI\modules\ObjectDetectionYOLO11Net\custom-models\license-plate.onnx

Unable to load the model at C:\Program Files\CodeProject\AI\modules\ObjectDetectionYOLO11Net\custom-models\ipcam-combined.onnx

EDIT: So far it hasn't crashed, and people detection IS working. I can't get license-plate or license-plate-v5 working, but it appears this trick to get it going worked.

Server version: 2.9.5

System: Windows

Operating System: Windows (Windows 11 24H2)

CPUs: 13th Gen Intel(R) Core(TM) i5-13500T (Intel)

1 CPU x 14 cores. 20 logical processors (x64)

GPU (Primary): Intel(R) UHD Graphics 770 (1,024 MiB) (Intel Corporation)

Driver: 31.0.101.3616

System RAM: 32 GiB

Platform: Windows

BuildConfig: Release

Execution Env: Native

Runtime Env: Production

Runtimes installed:

.NET runtime: 9.0.11

.NET SDK: Not found

Default Python: Not found

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

Intel(R) UHD Graphics 770:

Driver Version 31.0.101.3616

Video Processor Intel(R) UHD Graphics Family

System GPU info:

GPU 3D Usage 6%

GPU RAM Usage 0

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168


Hi Mike et al, installation was smooth. Currently I am running just this module with just the "ipcam-combined-v5" model.

It's only been an hour and so far so good; it has not missed any detections.

PC performance seems to be the same. The only deviation I noticed is inference time: from an average of 75ms it is now around 225ms. I will update after 24 hours.

Thanks for doing this, your contribution is very helpful.


PeteJ

Pulling my weight

Update: After running for nearly 24 hours, no change to my above observation. Running smoothly, with no missed detections of people or vehicles. Inference is still about 3 times slower than my previous setup with YOLOv5 6.2 on an RTX 3050 GPU. Thanks to Mike and everyone.

That's pretty slow. To compare, I'm running on an Intel iGPU and my avg inference time is under 80ms.

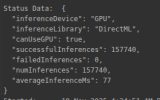

> Update: After running for nearly 24 hours, no change to my above observation. Running smoothly, with no missed detections of people or vehicles. Inference is still about 3 times slower than my previous setup with YOLOv5 6.2 on an RTX 3050 GPU. Thanks to Mike and everyone.

Post a screenshot of the dashboard, like the one below.

> That's pretty slow. To compare, I'm running on an Intel iGPU and my avg inference time is under 80ms.

Thank you! The log says "averageInferenceMs": 136, but that average includes lots of "No Object Found" results at under 20ms inference time. I have shared the screenshot above; when I average over a one-hour period for everything other than "No Object Found", it comes to that big number.

View attachment 233110

Previously I was running YOLOv5 6.2, which used the GPU (CUDA); the average was under 75ms, and "No Object Found" results averaged 15ms.

By the way, I am running the large model.
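For anyone who wants to separate the two averages described above, here is a minimal Python sketch. It assumes the server log uses the same line format as the "Response rec'd ... took NNNNms" lines quoted earlier in this thread; the function name `average_inference_ms` and the regular expression are illustrative and not part of CodeProject.AI.

```python
import re
from statistics import mean

# Assumed line format, copied from the log lines quoted earlier in the thread:
#   16:52:51:Response rec'd from Object Detection (YOLO11 .NET) command 'custom' (...21f1a5) ['No objects found'] took 12838ms
LINE_RE = re.compile(r"\['(?P<result>[^']*)'\] took (?P<ms>\d+)ms")


def average_inference_ms(log_lines):
    """Return (overall, with_objects, no_objects) averages in milliseconds.

    An average with no matching lines is returned as None.
    """
    hits, misses = [], []
    for line in log_lines:
        match = LINE_RE.search(line)
        if match is None:
            continue  # not a response line (e.g. a 'dequeued' line)
        ms = int(match.group("ms"))
        # Treat "No objects found" / "No Object Found" as a miss.
        if match.group("result").lower().startswith("no object"):
            misses.append(ms)
        else:
            hits.append(ms)

    def avg(values):
        return mean(values) if values else None

    return avg(hits + misses), avg(hits), avg(misses)


# The two response lines quoted earlier in the thread.
SAMPLE = [
    "16:52:51:Response rec'd from Object Detection (YOLO11 .NET) command 'custom' (...21f1a5) ['No objects found'] took 12838ms",
    "16:56:55:Response rec'd from Object Detection (YOLO11 .NET) command 'custom' (...3f93fd) ['Found person'] took 12846ms",
]

overall, with_objects, no_objects = average_inference_ms(SAMPLE)
print(overall, with_objects, no_objects)
```

Pasting an hour of log lines into the list gives the "found something" average separately from the overall figure, which makes comparisons between setups less dependent on how many empty frames each one processed.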


> I might be wrong here but I think the multiple items per line means it's running through multiple models? I had that too until I wiped out everything and specified just one model.

Even before using this YOLOv11 module I have always used only one module and one model within that module. For each detection it processes 4 images. I have always used the Blue Iris default of sending 1 pre-trigger image and 2 post-trigger images; I don't know why I see 4 lines instead of 3, but that is how it's been since I started using BI and CPAI.

> Even before using this YOLOv11 module I have always used only one module and one model within that module. For each detection it processes 4 images. I have always used the Blue Iris default of sending 1 pre-trigger image and 2 post-trigger images; I don't know why I see 4 lines instead of 3, but that is how it's been since I started using BI and CPAI.

I send 5 pre-images and 5 post-images, 10 total, and only get one detection per line: [Found, truck]. I don't think it has to do with the number of images it gets sent.

> I send 5 pre-images and 5 post-images, 10 total, and only get one detection per line: [Found, truck]. I don't think it has to do with the number of images it gets sent.

I am probably doing something wrong. I changed the pre-trigger and post-trigger images to 3 each in BI and I see 7 entries in CPAI (similar to before, when the total was 3 images and there were 4 entries). I will change to 5 each and see; I am pretty sure it is going to show 11 entries in CPAI.
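The counts reported in this post fit a simple pattern: one more entry than pre-trigger plus post-trigger images. A tiny Python sketch of that arithmetic follows. The helper `expected_cpai_entries` is hypothetical, and the reason for the extra entry (perhaps the trigger frame itself) is an assumption that nothing in this thread confirms.

```python
def expected_cpai_entries(pre_trigger_images, post_trigger_images, extra_images=1):
    """Hypothetical helper: entries seen in CPAI per alert.

    extra_images=1 is inferred from the counts reported above
    (1 + 2 -> 4 entries, 3 + 3 -> 7 entries); it is not a documented value.
    """
    return pre_trigger_images + post_trigger_images + extra_images


for pre, post in [(1, 2), (3, 3), (5, 5)]:
    print(pre, post, expected_cpai_entries(pre, post))
```

If the 5 + 5 test shows 11 entries, the pattern holds for this setup; another poster in this thread reports 10 lines for 5 + 5, so the extra entry may depend on configuration.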

> I am probably doing something wrong. I changed the pre-trigger and post-trigger images to 3 each in BI and I see 7 entries in CPAI (similar to before, when the total was 3 images and there were 4 entries). I will change to 5 each and see; I am pretty sure it is going to show 11 entries in CPAI.

Oh, I see the confusion.

And maybe I'm only seeing one truck per detection because I only have one truck in the image.

Where you are getting [Found, Truck, Truck, Truck], are there three trucks in the image?

I get one line per picture sent, like you are describing (10 lines in total), but in each line I only have [Found, Truck], presumably because I only have one truck in the image.

> Oh, I see the confusion.
>
> And maybe I'm only seeing one truck per detection because I only have one truck in the image.
>
> Where you are getting [Found, Truck, Truck, Truck], are there three trucks in the image?
>
> I get one line per picture sent, like you are describing (10 lines in total), but in each line I only have [Found, Truck], presumably because I only have one truck in the image.

My mistake, I misread your original comment and thought you were talking about the number of lines; now I see you are talking about multiple objects in the same line. Yes, the additional cars or trucks are stationary, meaning they are already parked in the path when a new car or truck comes by. CPAI then detects those as well and makes multiple entries in that one line. I don't know how to make CPAI report only the new arrival and ignore the ones already parked.
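One generic way to ignore parked vehicles, if you post-process the detections yourself, is to compare each new detection against the boxes from an earlier "baseline" frame and drop any that overlap heavily with a box of the same label. The Python sketch below illustrates that idea only; it is not a CodeProject.AI or Blue Iris feature. It assumes each detection is a dict with `label`, `x_min`, `y_min`, `x_max` and `y_max` keys, and the function names and the 0.8 threshold are hypothetical.

```python
def iou(a, b):
    """Intersection-over-union of two boxes (dicts with x_min, y_min, x_max, y_max)."""
    inter_w = max(0, min(a["x_max"], b["x_max"]) - max(a["x_min"], b["x_min"]))
    inter_h = max(0, min(a["y_max"], b["y_max"]) - max(a["y_min"], b["y_min"]))
    inter = inter_w * inter_h
    area_a = (a["x_max"] - a["x_min"]) * (a["y_max"] - a["y_min"])
    area_b = (b["x_max"] - b["x_min"]) * (b["y_max"] - b["y_min"])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def new_detections(baseline, current, iou_threshold=0.8):
    """Return detections in `current` with no same-label, high-overlap box in `baseline`."""
    return [
        det
        for det in current
        if not any(
            ref["label"] == det["label"] and iou(ref, det) >= iou_threshold
            for ref in baseline
        )
    ]


# Illustrative data: one parked truck seen in both frames, one truck arriving.
parked = {"label": "truck", "x_min": 100, "y_min": 100, "x_max": 300, "y_max": 250}
parked_again = {"label": "truck", "x_min": 102, "y_min": 101, "x_max": 301, "y_max": 251}
arriving = {"label": "truck", "x_min": 500, "y_min": 120, "x_max": 700, "y_max": 280}

print(new_detections([parked], [parked_again, arriving]))
```

The threshold trades off two failure modes: too low and a vehicle pulling in next to a parked one may be suppressed, too high and small box jitter on the parked vehicle makes it look new.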