I'm asking because I just got a 5060 Ti (I previously had a 1070 that was working fine), and it is unsupported in the current version of CPAI.

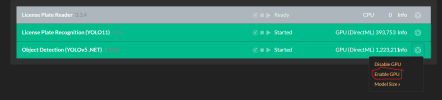

I have been running CPAI in Docker and cannot get it to work with the 5060 Ti. I also tried running this YOLO11 addon in my Docker container, but that did not work either: it acted as if it couldn't see the GPU, even though nvidia-smi works inside the container (see the system info output from CPAI below).
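For anyone wanting to narrow this down: one possible cause (an assumption on my part, not something confirmed here) is that the PyTorch build inside the module was not compiled for compute capability 12.0, which is what the 5060 Ti reports. In that case nvidia-smi works fine but the framework still refuses to use the card. Below is a rough sketch of how that could be checked. `arch_supported` is a hypothetical helper name, and it only looks at the compiled `sm_XY` entries (it ignores PTX/`compute_XY` entries, which may still allow JIT compilation in some builds).

```python
import re


def arch_supported(capability, arch_list):
    """Return True if any compiled sm_XY entry can run on the device.

    capability: (major, minor) tuple, e.g. (12, 0) for the 5060 Ti.
    arch_list:  strings such as "sm_86", in the format returned by
                torch.cuda.get_arch_list().

    Assumption: a binary built for sm_XY runs on a device with the same
    major version X and a minor version >= Y. PTX entries are ignored.
    """
    major, minor = capability
    for arch in arch_list:
        match = re.fullmatch(r"sm_(\d+)(\d)", arch)
        if match is None:
            continue  # skip "compute_XY" and other non-plain entries
        arch_major, arch_minor = int(match.group(1)), int(match.group(2))
        if arch_major == major and arch_minor <= minor:
            return True
    return False


if __name__ == "__main__":
    try:
        import torch
    except ImportError:
        print("PyTorch is not installed in this Python environment")
    else:
        archs = torch.cuda.get_arch_list()
        print("CUDA available:", torch.cuda.is_available())
        print("Compiled archs:", archs)
        if torch.cuda.is_available():
            cap = torch.cuda.get_device_capability(0)
            print("Device capability:", cap)
            print("Covered by this build:", arch_supported(cap, archs))
```

This would need to be run with the same Python environment the module itself uses inside the container, otherwise it checks the wrong PyTorch install. If `sm_120` is missing from the compiled list, that would be consistent with the GPU being visible to nvidia-smi but not usable by the module.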

I think DirectML is only available on bare-metal Windows?

I run Blue Iris in a Windows 11 VM on Proxmox, and CPAI in Docker in an Ubuntu VM with the GPU passed through.

Unfortunately, I need the GPU passed through to the Ubuntu VM; otherwise I'd move it to the Windows VM that BI is running on.

Just trying to figure out a way to get CPAI working again for Blue Iris with my new 5060 Ti... unfortunately, it's looking like I might just need to suck it up and move to Frigate.


Server version: 2.9.5

System: Docker (Ubuntu-Plex)

Operating System: Linux (Ubuntu 22.04 Jammy Jellyfish)

CPUs: AMD Ryzen 9 5950X 16-Core Processor (AMD)

1 CPU x 12 cores. 12 logical processors (x64)

GPU (Primary): NVIDIA GeForce RTX 5060 Ti (16 GiB) (NVIDIA)

Driver: 580.95.05, CUDA: 13.0 (up to: 13.0), Compute: 12.0, cuDNN: 8.9.6

System RAM: 59 GiB

Platform: Linux

BuildConfig: Release

Execution Env: Docker

Runtime Env: Production

Runtimes installed:

.NET runtime: 9.0.0

.NET SDK: Not found

Default Python: 3.10.12

Go: Not found

NodeJS: Not found

Rust: Not found

Video adapter info:

System GPU info:

GPU 3D Usage 13%

GPU RAM Usage 2.8 GiB

Global Environment variables:

CPAI_APPROOTPATH = <root>

CPAI_PORT = 32168