I have two open tickets on GitHub about the TPU. They've been open for many months with nothing but crickets. Others have open tickets and it's mostly the same story. I believe CPAI is on life support now, and I really hope it can be revived, because AI with Blue Iris is a game changer.

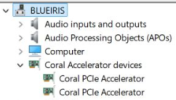

That Yeston card is a bit of a unicorn. It's a single-slot, low-profile card that needs no external power and runs fine on PCIe slot power alone (a slot supplies up to 75W). It idles around 4 watts and seems to burst to around 30 watts during active inference, and that's with the Huge model. One note: if you go down this path, you need CUDA 11.7 and cuDNN 8.9.7.29 for CPAI to work correctly (I spent many hours figuring that out).

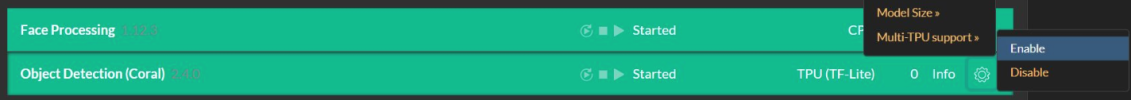

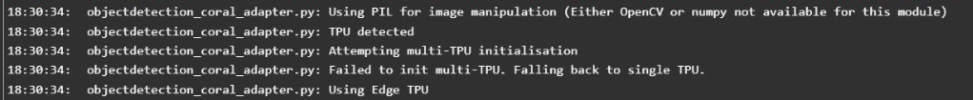

So the TPU is extremely low power, but CPAI support for it is lacking and seems to be abandoned. I could not get it to work with the license plate model, but it worked fine for object and face processing. Accuracy was "okay" compared to the CPU. I had to lower the detection threshold from 85%+ down to 60%+ for the TPU to detect objects, though its inference was much faster than the CPU's: 35ms for the Small model and 250ms for the Medium model, vs 500-1500ms on the CPU.

So the TPU is a better choice than the CPU if you don't need license plate support, and it is extremely low power. The Nvidia GPU seems to be the best solution overall, and it's also fairly low power. My TPU and daughter card cost about $90 total; the Yeston GPU was about $205.

I really wanted to like the Coral TPU. In my head it ticked all the boxes. If the TPU support were better, I wouldn't have even explored the GPU route. I hope CPAI picks up development again, as it took Blue Iris to the next level.

Other hardware is an ASRock DeskMini A300 with a Ryzen 3 3200 and 32GB RAM. Real powerhouse!